A Neural Pipeline System for Natural Language Generation

July 2019

Henry Elder, ADAPT Centre

Dublin City University

Supervisors: Jennifer Foster, Alexander O'Connor

Overview

- Introduction

- Research Methods

- Current Progress

- Conclusion

Natural Language Generation

https://www.vphrase.com/blog/natural-language-generation-explained/

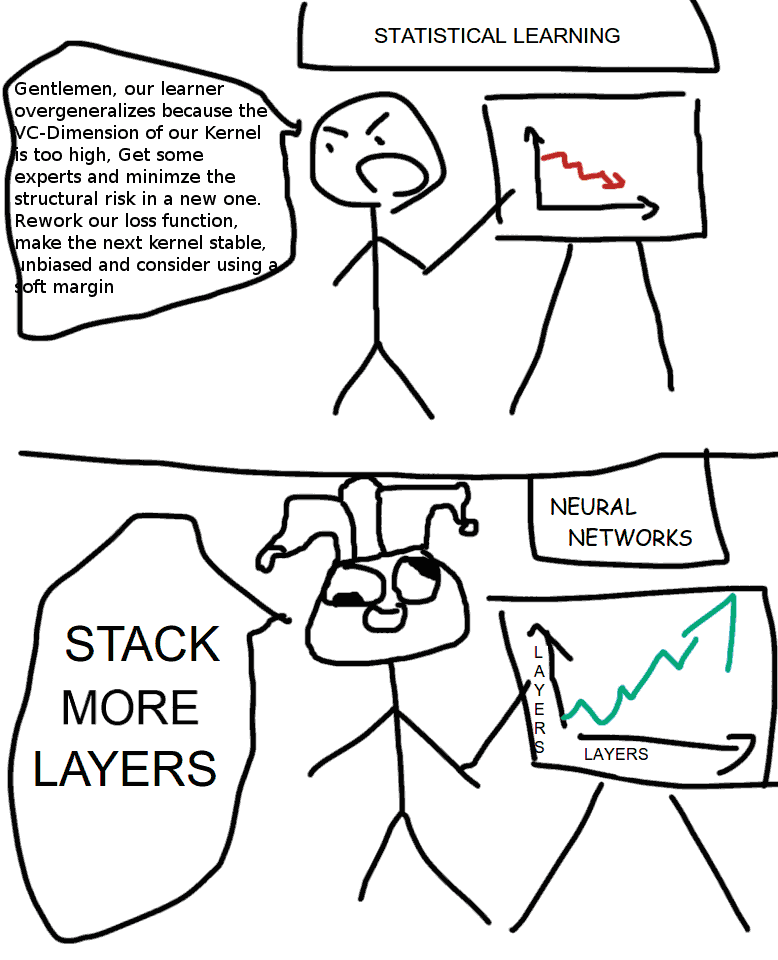

Neural NLG

Stephen Merity. 2016. “Peeking into the Neural Network Architecture Used for Google’s Neural Machine Translation.” https://smerity.com/articles/2016/google_nmt_arch.html

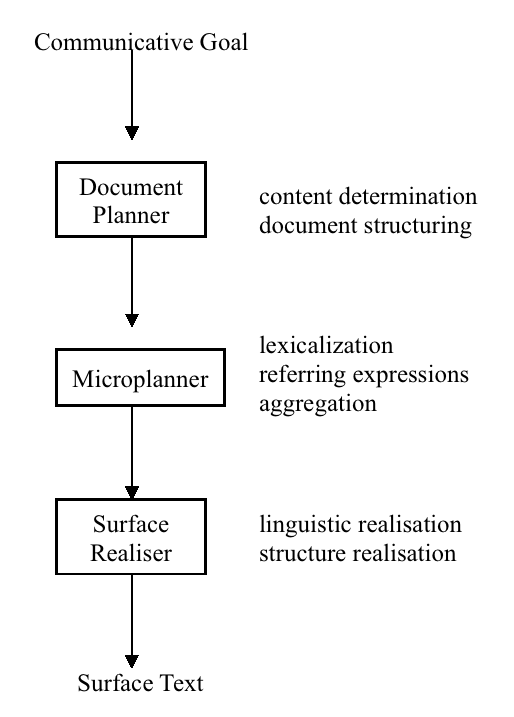

Traditional Pipeline Systems

Ehud Reiter and Robert Dale. 2000. "Building Natural Language Generation Systems"

Requirements of NLG systems

- Adequacy

  - Utterances include all relevant information

- Fluency

  - Information expressed correctly and fluently
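
A crude way to check adequacy is to test whether each input value shows up in the output. The sketch below assumes the input is a flat dictionary of attribute-value pairs and uses verbatim, case-insensitive string matching. The function name `missing_values` and the example input are hypothetical, and a real check would need to handle paraphrases (e.g. "riverside" vs "by the river").

```python
def missing_values(mr: dict[str, str], utterance: str) -> list[str]:
    """Return input values that do not appear verbatim (case-insensitive) in the utterance.

    Assumption: `mr` is a flat attribute -> value mapping; matching is plain
    substring search, so paraphrased values are reported as missing.
    """
    text = utterance.lower()
    return [value for value in mr.values() if value.lower() not in text]


if __name__ == "__main__":
    # Hypothetical input; not taken from any dataset.
    mr = {"near": "Cotto", "area": "riverside", "eatType": "pub"}
    utterance = "You will find this local gem near Cotto in the riverside area."
    print(missing_values(mr, utterance))  # ['pub']
```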

Problems with Neural NLG

- Repetition

  - I am a famous twitch streamer streamer streamer streamer

- Contradiction

  - I do not like to read, but I do like to read.

- Ignores rare words

- Hallucinates facts

  - My dream job is looking for a new job.

- Adequacy and missing information
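
Repetition like the first example above can be flagged automatically by counting word n-grams that occur more than once. This is a minimal sketch assuming lowercase whitespace tokenization; the function name `repeated_ngrams` is hypothetical.

```python
from collections import Counter


def repeated_ngrams(text: str, n: int = 2) -> dict[tuple[str, ...], int]:
    """Return every word n-gram that occurs more than once, mapped to its count.

    Assumption: lowercase + whitespace tokenization, so punctuation stays
    attached to the neighbouring word.
    """
    tokens = text.lower().split()
    counts = Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))
    return {gram: count for gram, count in counts.items() if count > 1}


if __name__ == "__main__":
    print(repeated_ngrams("I am a famous twitch streamer streamer streamer streamer"))
    # {('streamer', 'streamer'): 3}
```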

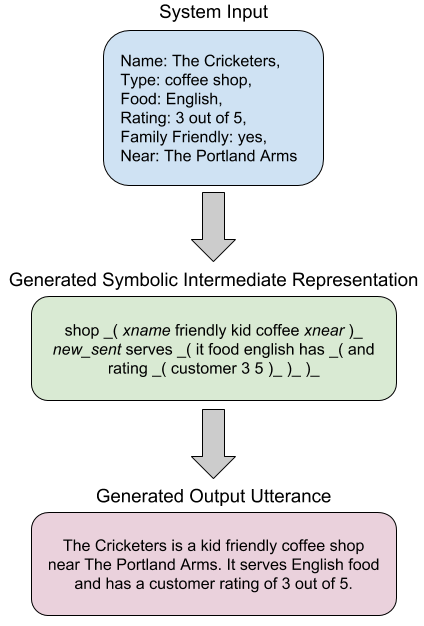

Our Proposed Approach

- What to say

  - Content Selection

- How to say it

  - Surface Realization
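
The split between the two stages can be illustrated with a toy pipeline. This is a sketch under stated assumptions, not the proposed system: the `Fact` type, the salience scores, the top-k selection rule and the template-based realizer are all hypothetical stand-ins for learned content selection and surface realization models. The list of selected facts plays the role of the intermediate representation.

```python
from dataclasses import dataclass


@dataclass
class Fact:
    attribute: str
    value: str
    salience: float  # hypothetical importance score


def select_content(facts: list[Fact], k: int) -> list[Fact]:
    """Stage 1, what to say: keep the k most salient facts, most salient first."""
    return sorted(facts, key=lambda f: f.salience, reverse=True)[:k]


# Hypothetical phrase templates standing in for a neural surface realizer.
TEMPLATES = {
    "name": "{} is a restaurant",
    "near": "near {}",
    "area": "in the {} area",
    "food": "serving {} food",
}


def realize(plan: list[Fact]) -> str:
    """Stage 2, how to say it: verbalize the selected facts in plan order."""
    return " ".join(TEMPLATES[f.attribute].format(f.value) for f in plan) + "."


if __name__ == "__main__":
    facts = [
        Fact("food", "Italian", 0.2),
        Fact("name", "Example Bistro", 1.0),
        Fact("area", "riverside", 0.6),
        Fact("near", "Cotto", 0.8),
    ]
    plan = select_content(facts, k=3)  # the intermediate representation
    print(realize(plan))
    # Example Bistro is a restaurant near Cotto in the riverside area.
```

Because the stages communicate only through the plan, each can be inspected or replaced independently, e.g. the plan can be checked for missing information before any text is generated.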

Research Questions

- What is the best intermediate representation to use for surface realization?

- How can we generate intermediate representations as part of the content selection step?

Research Methods

Modelling

Source unknown

Creating Datasets

- Automatically constructed e.g. by scraping the web

- Crowdsourcing

- Programmatically created e.g. by parsing

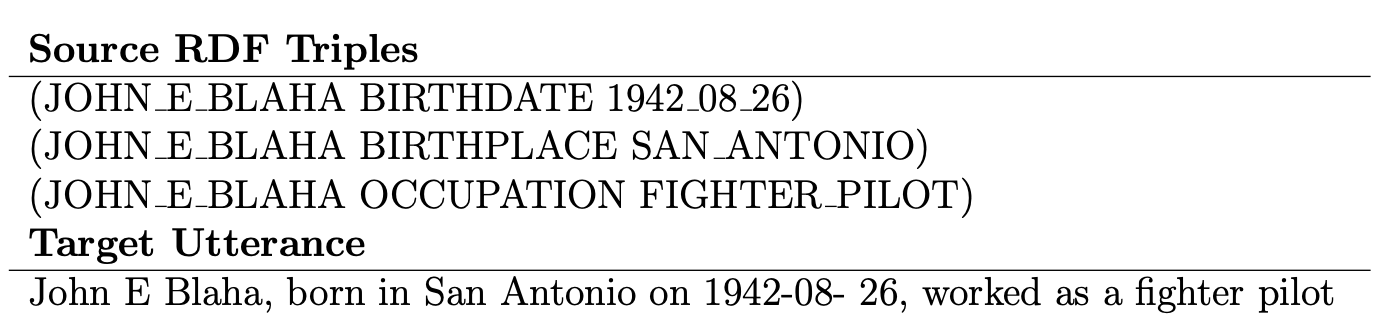

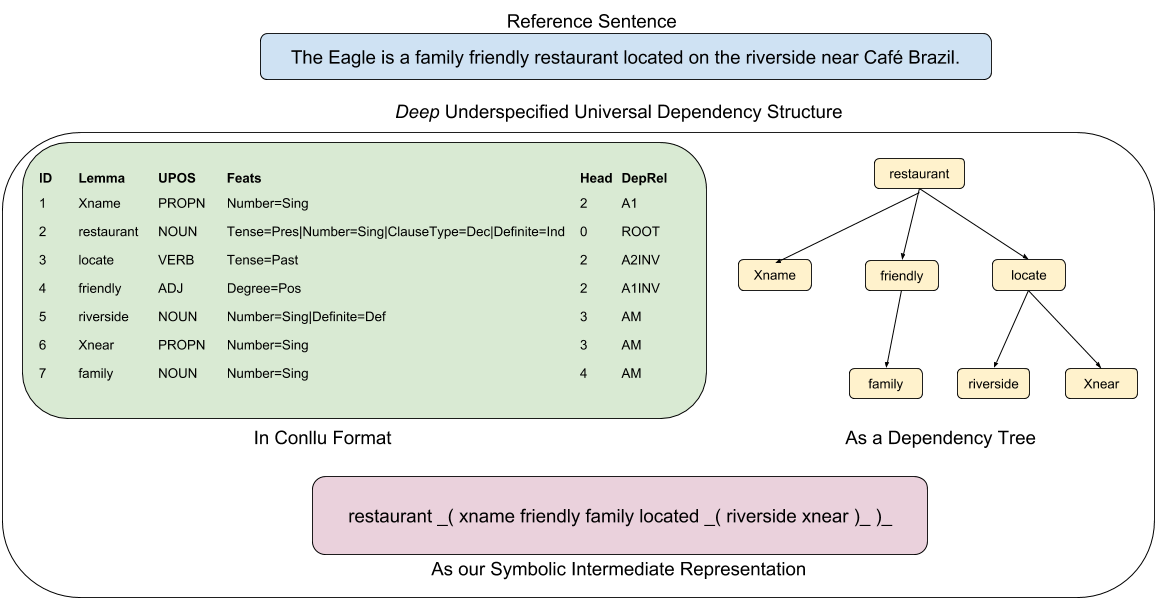

Intermediate Representation

You will find this local gem near Cotto in the riverside area.

Evaluation

- Automated metrics: not that informative or useful!

  - N-gram overlap, e.g. BLEU

  - Perplexity, edit distance

- Human evaluation

  - Count-based metrics

  - Ranking
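
For reference, the automated metrics listed above can each be sketched in a few lines. These are simplified, hypothetical implementations for illustration only: `sentence_bleu` follows the BLEU recipe of clipped n-gram precisions combined by a geometric mean with a brevity penalty, but omits the smoothing and corpus-level aggregation that standard toolkits apply; `edit_distance` is word-level Levenshtein distance; `perplexity` assumes the per-token probabilities have already been obtained from a language model.

```python
import math
from collections import Counter


def ngrams(tokens: list[str], n: int) -> Counter:
    """Count the word n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def modified_precision(candidate: list[str], references: list[list[str]], n: int) -> float:
    """BLEU's clipped n-gram precision: each candidate n-gram is credited at most
    as many times as it appears in any single reference."""
    cand = ngrams(candidate, n)
    if not cand:
        return 0.0
    max_ref: Counter = Counter()
    for ref in references:
        max_ref |= ngrams(ref, n)  # union keeps the maximum count per n-gram
    clipped = sum(min(count, max_ref[gram]) for gram, count in cand.items())
    return clipped / sum(cand.values())


def sentence_bleu(candidate: list[str], references: list[list[str]], max_n: int = 4) -> float:
    """Unsmoothed sentence-level BLEU (returns 0.0 if any precision is zero)."""
    precisions = [modified_precision(candidate, references, n) for n in range(1, max_n + 1)]
    if min(precisions) == 0.0:
        return 0.0
    c = len(candidate)
    # Effective reference length: the closest one, preferring the shorter on ties.
    r = min((len(ref) for ref in references), key=lambda length: (abs(length - c), length))
    brevity_penalty = 1.0 if c > r else math.exp(1 - r / c)
    return brevity_penalty * math.exp(sum(math.log(p) for p in precisions) / max_n)


def edit_distance(a: list[str], b: list[str]) -> int:
    """Levenshtein distance: minimum number of insertions, deletions and substitutions."""
    prev = list(range(len(b) + 1))
    for i, x in enumerate(a, start=1):
        curr = [i]
        for j, y in enumerate(b, start=1):
            curr.append(min(prev[j] + 1,              # delete x
                            curr[j - 1] + 1,          # insert y
                            prev[j - 1] + (x != y)))  # substitute (free if equal)
        prev = curr
    return prev[-1]


def perplexity(token_probs: list[float]) -> float:
    """exp of the average negative log-probability the model assigned to each token."""
    return math.exp(-sum(math.log(p) for p in token_probs) / len(token_probs))


if __name__ == "__main__":
    ref = "the cat is on the mat".split()
    print(modified_precision("the the the the the the the".split(), [ref], 1))  # 0.2857... (= 2/7)
    print(sentence_bleu(ref, [ref]))                                          # 1.0
    print(edit_distance("the cat sat".split(), "the cat sat down".split()))    # 1
    print(perplexity([0.25, 0.25, 0.25, 0.25]))                                # approximately 4.0
```

The example outputs also illustrate the slide's point: an n-gram or edit-distance score only measures surface overlap with a reference, so a fluent paraphrase can score poorly while a repetitive output can still collect credit.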

Current Progress

WebNLG Challenge

Results: Top scores in all automated and human evaluation metrics on the seen subtask, out of 9 systems, but relatively worse than other systems on the unseen subtask.

Lessons learned: The baseline seq2seq model performed very strongly, especially given the graph-like nature of the inputs.
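
WebNLG inputs are sets of RDF triples (subject, predicate, object), and one common way to feed such a graph to a sequence-to-sequence model is simply to flatten it into a token sequence. A minimal sketch follows; the `<S>`/`<P>`/`<O>` marker tokens, the underscore-to-space normalization and the example triples are assumptions for illustration, not necessarily the preprocessing used in the submission.

```python
Triple = tuple[str, str, str]  # (subject, predicate, object)


def linearize(triples: list[Triple]) -> str:
    """Flatten RDF triples into one token string for a seq2seq encoder.

    Hypothetical scheme: each triple becomes '<S> subj <P> pred <O> obj',
    with underscores in entity names replaced by spaces.
    """
    parts: list[str] = []
    for subj, pred, obj in triples:
        parts += ["<S>", subj.replace("_", " "), "<P>", pred, "<O>", obj.replace("_", " ")]
    return " ".join(parts)


if __name__ == "__main__":
    # Hypothetical input triples.
    triples = [("John_Doe", "birthPlace", "Dublin"), ("John_Doe", "occupation", "Test_pilot")]
    print(linearize(triples))
    # <S> John Doe <P> birthPlace <O> Dublin <S> John Doe <P> occupation <O> Test pilot
```

Flattening discards the explicit graph structure (here the shared subject is simply repeated), which is what makes a plain seq2seq baseline's strong performance on these inputs notable.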

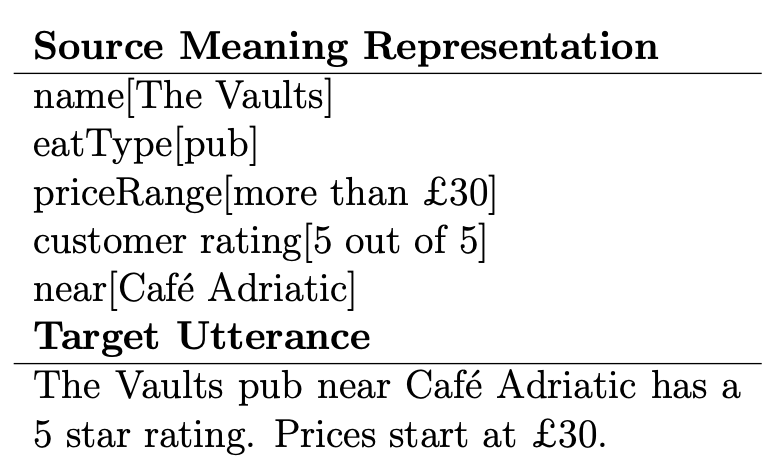

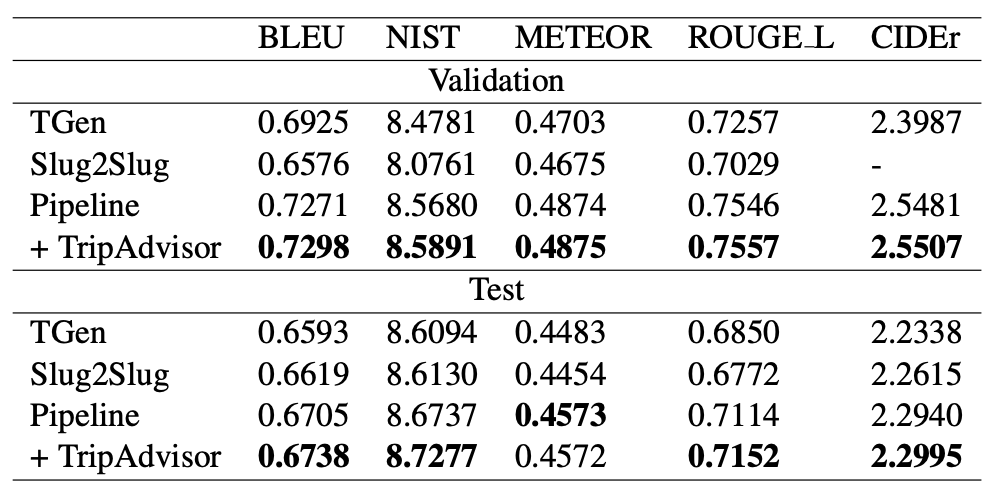

E2E NLG Challenge

Results: Our joint submission with Harvard NLP achieved the top METEOR, ROUGE, and CIDEr scores out of 60 systems. Our own diversity-enhancing approach performed worse than the baseline.

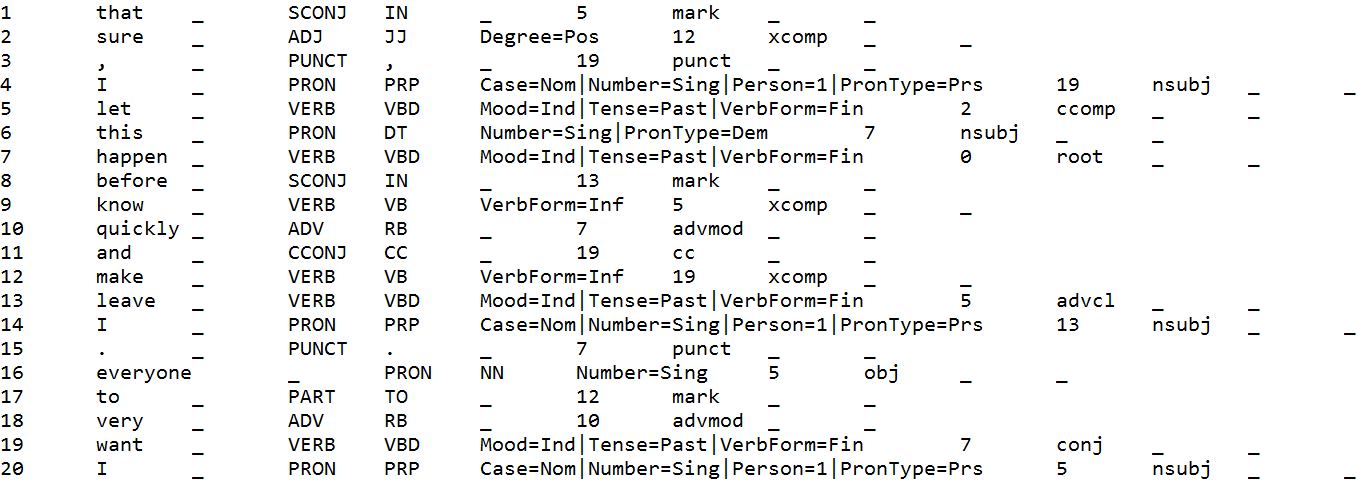

Surface Realization Shared Task

Results: Top scores in all automated metrics and first for readability in the human evaluation for English, out of 8 systems. Only team to enter the deep track.

Target Sentence: This happened very quickly, and I wanted to make sure that I let everyone know before I left.

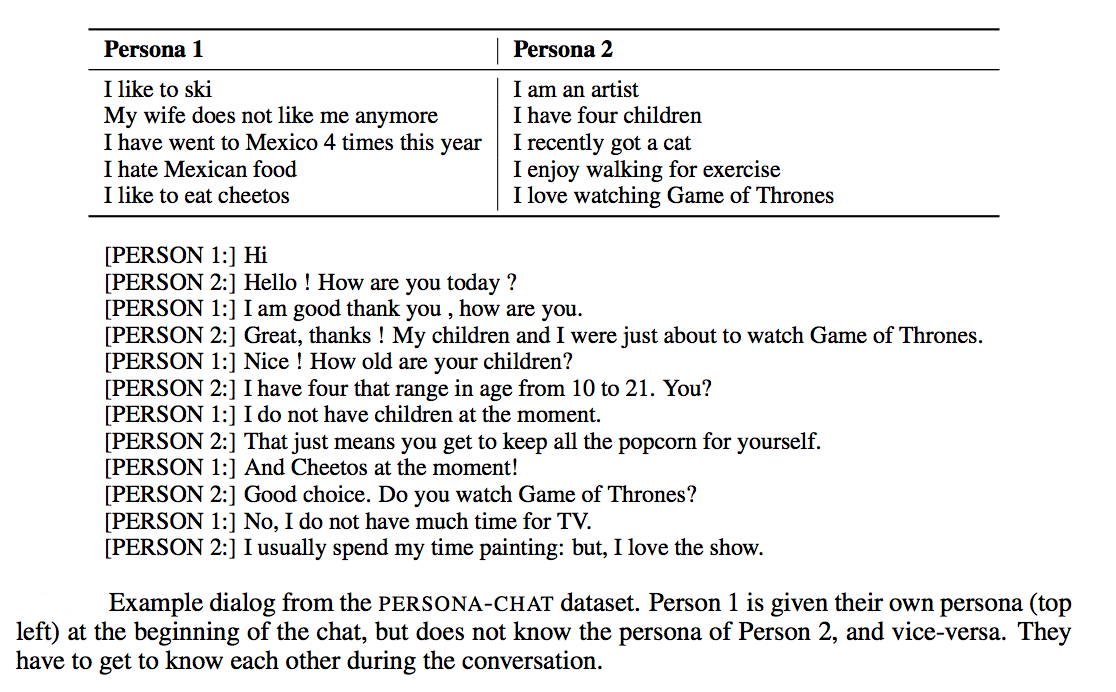

ConvAI2

Results: Ranked second out of 23 systems on the automated evaluation leaderboard, but performed worse in the human evaluation, ranking 6th.

What can we do to improve the reliability of neural-based NLG?

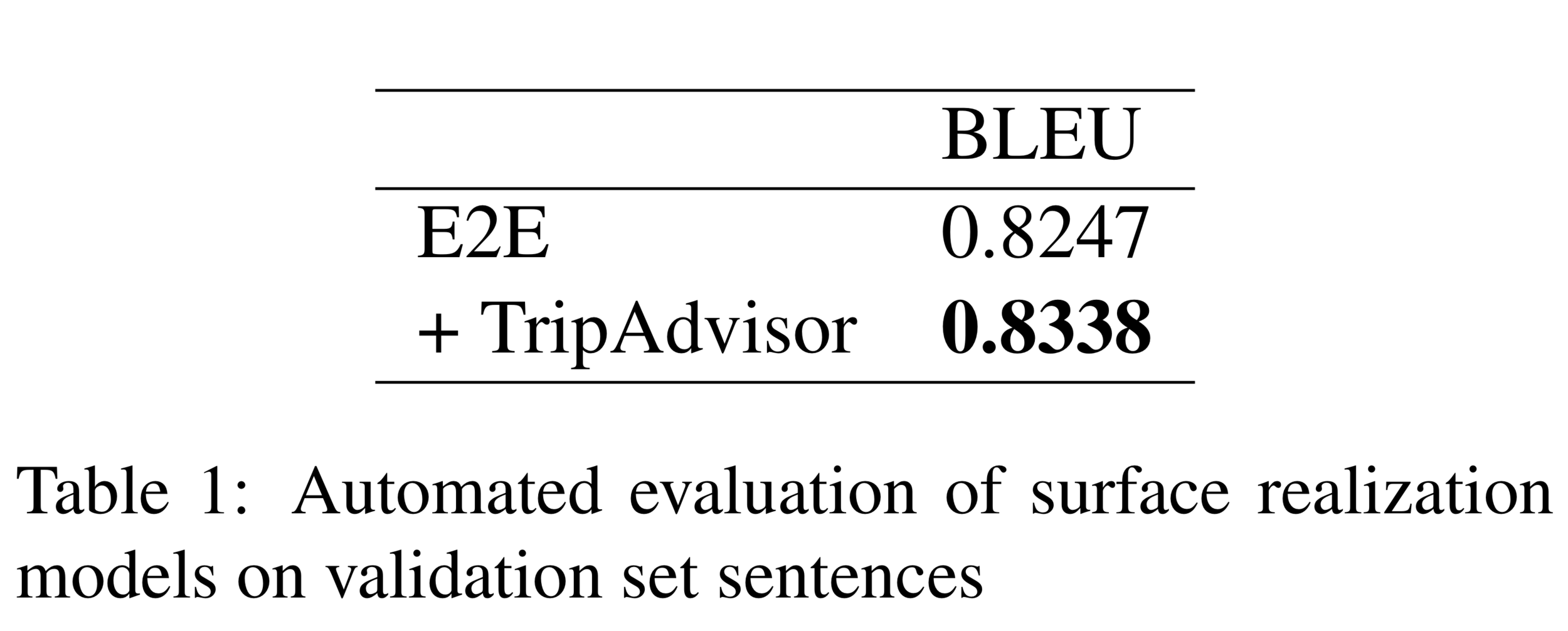

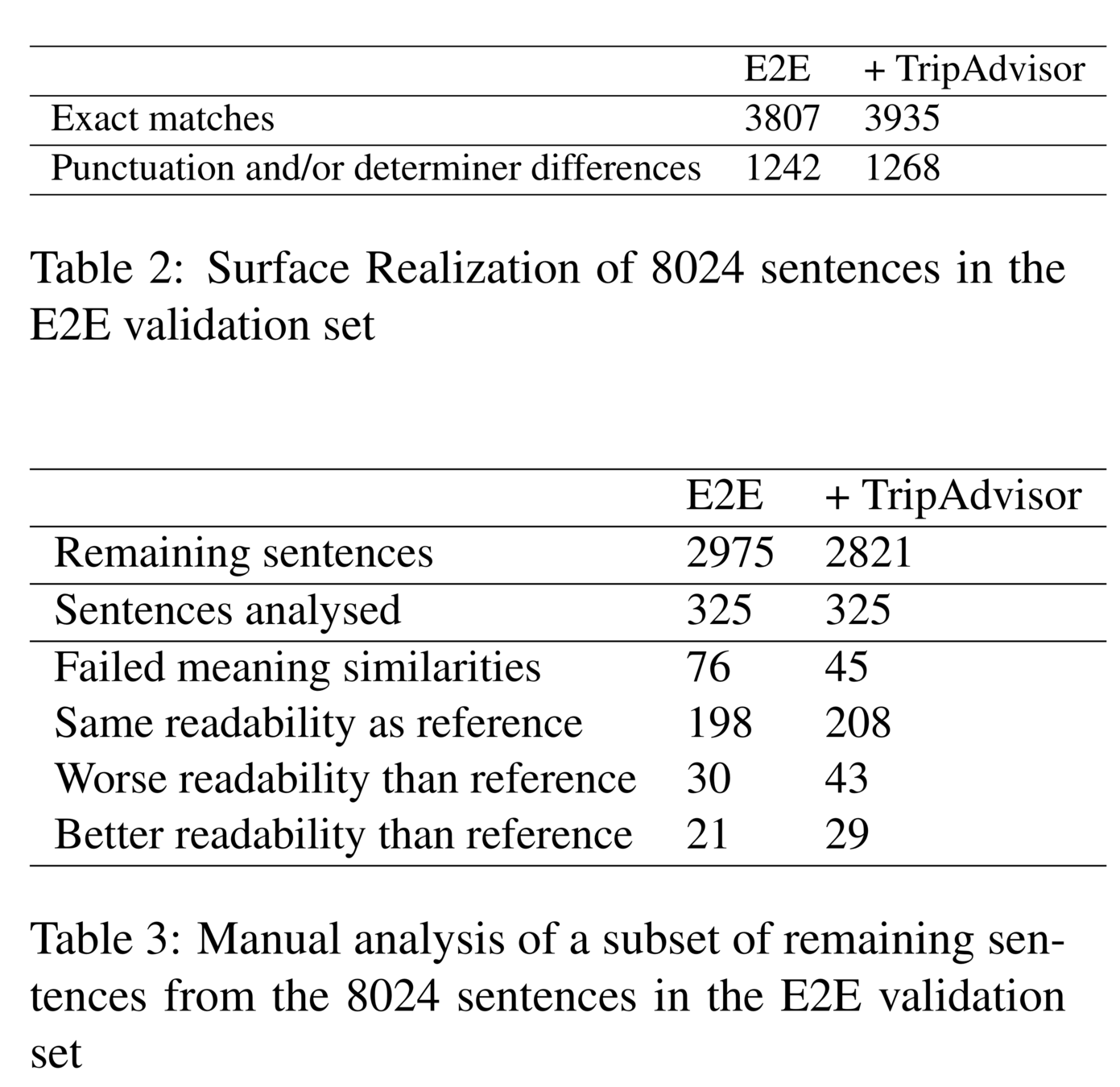

E2E automated evaluation

Takeaway

Multi-stage neural NLG systems are capable of improving the quality of generated utterances (in English).